Reading Time: 12 minutes

This is a ramble from which to launch ideas: I’m scoping an app for an assignment. You can pass on it if you like.

Assignment

From the off, this project must meet set assignment criteria, and inventing a new app isn’t one of them. A new app simply provides the vehicle on which to pin accessibility principles.

The app isn’t the important part. We’re looking at designing an interface for a specific user group with a psychological, cognitive, emotional, or physical challenge.

My only disappointment is that the assignment is all visual design. There’s more to digital than looking at it.

Student Magic

We do have the support of some “Student Magic”: there’s no budget constraint; we can make wild assumptions about technology and about collaboration between often uncooperative brands; and we are not developers or engineers, so that’s someone else’s problem. It’s all visual and “interaction”. Easy?

The problem

We all lose “stuff” at home or at work: keys, devices, and then our minds. With good or even assisted vision, we can search for our stuff, turning the place upside down before our spouse tells us where they tidied it to.

What if we do not have sufficient visual acuity?

The project intends to marry the utility of Tile (Bluetooth-enabled locator tabs that emit a sound on demand from an app) with indoor tracking technologies to assist anyone with:

- Guidance to locations at home, at work, at school/college (with an aim to assist those new to visual loss?)

- Finding stuff (that would help me)

Disappointingly, I am not the first person to consider this – go figure!

Encouragingly, the smartphone technology considered here is contemporary and in step with concepts you may find searching the Internet.

Problem statement:

As a recently visually impaired person, I want support adapting to my indoor spaces such as when locating rooms or items so I can feel less dependent on others.

Business case

Low vision, visually impaired, partially sighted, legally blind (and others) are terms used interchangeably with blind. Blindness is best defined functionally or sociologically and not physically or medically (Jernigan, 2005).

Fighting Blindness (n.d.) highlights that 246,773 people in Ireland and 285 million people worldwide are affected by genetic, age-related, and degenerative conditions affecting sight. Ireland has 95,000 households (Central Statistics Office, n.d.). We can generalise: 2.5 people per household.

The National Council for the Blind of Ireland (NCBI, n.d.-a) adds more context. It provides its services to over 8,000 people every year:

- 2,000 are first-time contacts

- 95% of people accessing NCBI services have some degree of useful vision

- Fewer than 5% are totally blind.

Applying those percentages to the 2,000 first-time contacts suggests about 1,900 new approaches from partially sighted people each year and about 100 from people who are totally blind.
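The estimate above assumes NCBI’s service-wide percentages also hold for its 2,000 first-time contacts. Under that assumption, the arithmetic is simply:

```python
# Assumption: the service-wide split (95% with some useful vision,
# fewer than 5% totally blind) also applies to first-time contacts.
FIRST_TIME_CONTACTS = 2_000
USEFUL_VISION_SHARE = 0.95

partially_sighted = round(FIRST_TIME_CONTACTS * USEFUL_VISION_SHARE)
totally_blind = FIRST_TIME_CONTACTS - partially_sighted

print(partially_sighted, totally_blind)  # 1900 100
```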

And, thinking commercially, with 10 million blind or visually impaired Americans, a number increasing by 75,000 per year (CNBC, 2017), there is a clear need for a cost-effective assistive adjunct in English and Spanish. Add the possibilities of internationalisation and localisation worldwide, and might the segment’s commercial possibilities make for an attractive return on investment?

Registered blind

The UK’s Royal National Institute of Blind People (RNIB, n.d.) states that one does not need to be in total darkness to be registered blind: one may be only partially sighted.

For example, a person who is registered blind may see from each eye, but not with both eyes together: that, plus myopia, hyperopia, astigmatism, and occasional bloody-mindedness.

The HSE website (Health Service Executive, 2018) lists the requirements for applicants to the Blind Welfare Allowance.

With aids, but without formal assistive technologies (AT), blind people can shop for their groceries independently online using a standard PC: pressing their nose to the monitor (and, in my 90-year-old Dad’s case, following homemade scribbled instructions and cussing like a trooper).

Not registered blind

Anyone can have a visual impairment and not be “blind”. A visual impairment is simply a decreased ability to see that glasses cannot correct.

For example, colour blindness, described by the Health Service Executive (HSE, n.d.), affects 8% of men and 0.5% of women worldwide (approximately 300 million people); at least 3 million people in the UK (4.5%) are colour blind (Colour Blind Awareness, n.d.).

It’s everyone!

And then there are tired eyes, ill-prescribed (or misplaced!) glasses, eye infections, injuries, and all manner of factors contributing to visual deficiencies when compared to someone with 20/20 vision.

These include environmental factors too: light or reflections on screens, light shining in the eyes and lowering contrast, poor screen positioning, vibration and movement, fractured displays, debris, dirt, and fingermarks (children, dog snot, or cat paw prints!).

We all need good, inclusive visual design to get us through life with our apps and websites.

Exploiting universal design

I am not a developer, as my hand-coded parent site proves. It’s essentially an HTML prototype: I design for digital, so I believe I should at least understand it. I profess accessible design, yet I’m still to get good at it. The site has poor colour contrast, links that open in new tabs/windows, etc. I am not the expert.

And if I, not being the expert, can cobble together all the good stuff that works well on my website (and there is plenty), then surely a dedicated developer can only do a better job? For example, Microsoft’s Office 365 has implemented an array of AT-compatible features to meet the requirements of EN 301 549, WCAG 2.0 AA, and US Section 508 (Microsoft, n.d.).
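Colour contrast, the first weakness I admit to above, is one of the easier WCAG 2.0 AA checks to automate. The sketch below implements the WCAG 2.0 relative-luminance and contrast-ratio formulas in Python. The function names are my own (hypothetical), it expects six-digit hex colours, and it checks only the AA text thresholds (4.5:1 normal, 3:1 large), not any other success criterion.

```python
def _linear(channel_8bit: int) -> float:
    """Linearise one 8-bit sRGB channel (WCAG 2.0 relative-luminance definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_colour: str) -> float:
    # Expects six-digit hex such as "#767676".
    h = hex_colour.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(colour_a: str, colour_b: str) -> float:
    lighter, darker = sorted(
        (relative_luminance(colour_a), relative_luminance(colour_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    # WCAG 2.0 AA (SC 1.4.3): at least 4.5:1 for normal text, 3:1 for large text.
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#ffffff"), 1))  # 21.0
print(passes_aa("#777777", "#ffffff"))  # False
print(passes_aa("#767676", "#ffffff"))  # True
```

Mid-grey #777777 on white comes out at roughly 4.48:1 and just fails, while #767676 just passes: a difference nobody would spot by eye.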

The key point is that inclusive or universal design does not stop at the presentation layer! There’s more to the web than looking at it.

It’s not all about vision

Accessibility is not all about vision. Our cognitive, physical, and emotional ability and condition each affect how we access digital content.

“Web accessibility also benefits people without disabilities. For example, a key principle of Web accessibility is designing Web sites and software that are flexible to meet different user needs, preferences, and situations.”

World Wide Web Consortium (2005).

Device and browsing accessibility adjuncts

Thankfully, assistive technologies (AT) do well to cover many design and development “oversights”. Our users can access content audibly, adjust the presentation (contrast, font sizes, etc.), and use custom input strategies (find out more from AbilityNet, n.d.).

Often these accessibility adjuncts are built into devices or browsers; often they are not. We cannot rely on AT to undo our poor work. It needs our support, and there is plenty we can give very easily by:

- Writing HTML semantically.

- Using HTML for content, CSS for presentation, and JavaScript (JS) for functionality (albeit there is crossover between CSS, JS, and functionality, which is fine if it degrades gracefully).

- Designing “mobile first” fluid-responsive presentation.

- Adding informative microdata and element attributes to our HTML.

- Not relying on JS where possible. Let the site work for non-JS browsers.

- Adding alternative content (for example, alt and longdesc attributes, or dedicated alternative pages describing the content of learning objects or video).
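Some of these habits can be spot-checked automatically. As one example, the sketch below uses Python’s standard-library html.parser to list images that have no alt attribute at all. The class and function names are hypothetical, and it only checks that alt is present: an empty alt="" is allowed because it marks a decorative image, and whether the text actually describes the image still needs a human.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> that has no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: list[str] = []

    # Self-closing <img ... /> tags also reach this method, because the base
    # class's handle_startendtag calls handle_starttag.
    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src") or "(no src)")


def find_images_without_alt(html: str) -> list[str]:
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


page = """
<img src="logo.png" alt="i-cu logo">
<img src="divider.png" alt="">
<img src="map.png">
"""
print(find_images_without_alt(page))  # ['map.png']
```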

These are off the top of my head, and it already seems a long list? The thing is, I’m an idiot, and I know and implement these techniques and strategies by habit. A developer? It’s their bag. They know this.

And still the digital app and website design landscape is polluted by trite work that displays no empathy for real people.

How blind people use an iPhone

You can learn more from the NCBI Virtual Technology Club podcast SmartVision2 Mobile Phone (NCBI, n.d.-b), which discusses a wide variety of smartphones and apps.

The podcast includes information on the Aira (glasses) subscription service, which gives visually impaired people access to a remote assistant who describes what they are “seeing”. Subscribers can call when passing through transport hubs or using dispensing machine interfaces, etc.

Aira’s service is relatively expensive (CNBC, 2017) and human-resource heavy; might the right app compensate?

Video: Aira (2018). AIRA used as an education assistant.

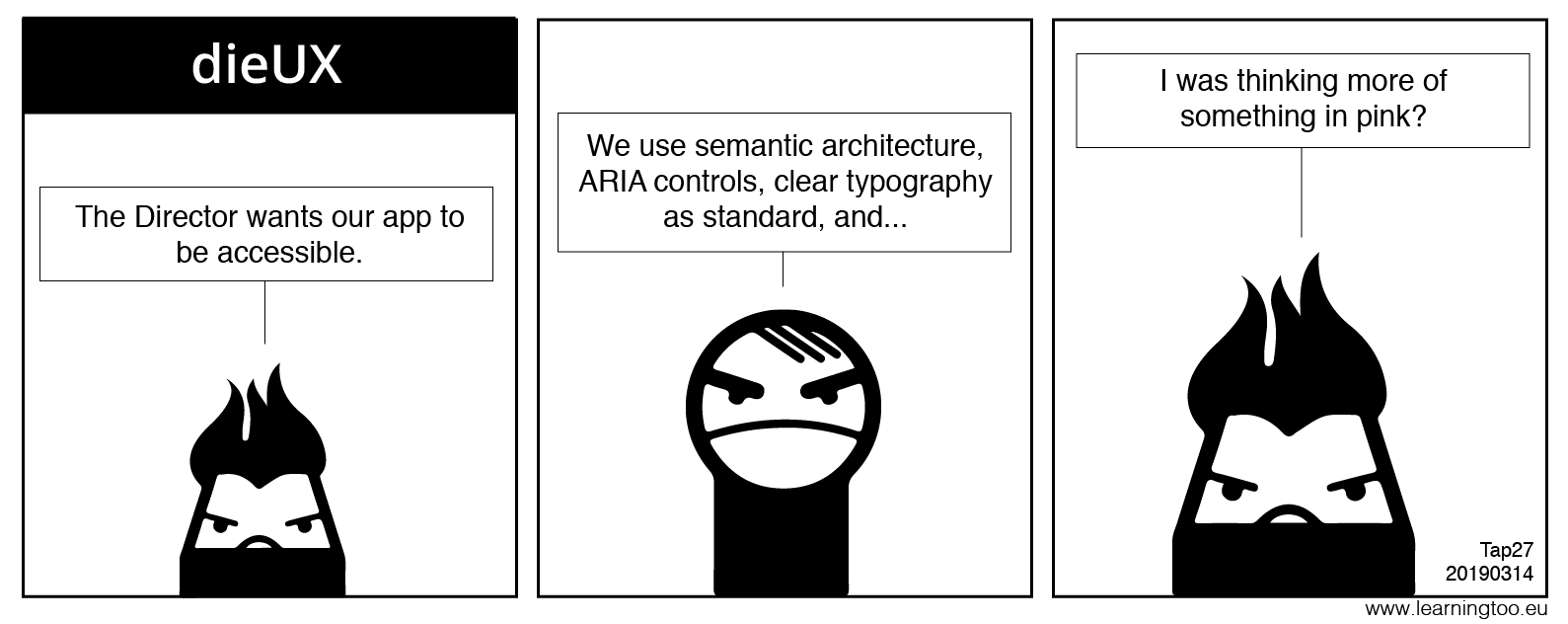

UX Designers’ responsibilities

The OSI network model has seven layers (Dennis, 2002). Presentation is only one of them. Of course, to vision-biased designers, that’s all that counts, and the engineers can do all the accessibility crap later. Codswallop.

User Experience designers must share responsibility for the accessibility of their digital product with the delivery team. It is an integral part of the User Experience.

We design in the HTML space and should have some appreciation of its accessibility toolkit and of universal design. It is a different paradigm from print. We all care deeply about usability, and usability relies on good accessibility.

And our users’ abilities, environment, emotional state, etc. are all a part of their Universal Experience. Our enterprises’ values and standards, practices and customer services all affect the overall (universal) delight of our digital products.

User Experience is more than skin deep.

Fogg’s (2008) Diamond of Emotion applies here. Here’s a screen grab. Thanks Foggy.

More grist for the mill

Read more of my opinions on UX, universal and user-centred design, and Selling UX Short with the 50% UI Role. Admittedly, those opinions are being challenged and are changing in places as I study and broaden my skill set, for example across mobile app domains :).

Project proposal

Caveat: changes of mind. I am a student, after all!

The project aims to expand the usefulness, and therefore the marketable value, of devices like Tile, a lost-and-found item finder.

Tile’s unique selling point is its social network of devices that can locate lost items tagged with a Bluetooth Tile, expanding the system’s usefulness beyond finding keys in the kitchen where you left them. But it doesn’t map your kitchen, only where your building is.

Different user needs

This project specifically aims to help blind people, and more specifically newly blind and visually challenged people, find their way around their home, work, or school/college spaces, while improving the findability of tagged items for everyone.

Non-impaired users may find benefits too. Might the app help people overcome cognitive difficulties as well? And let’s not ignore the handful of us who mislay keys, devices, or even cars from time to time (ahem).

Our users

- Primary: newly blind; adjusting.

- Secondary: visually impaired.

- Tertiary: anyone who finds it useful.

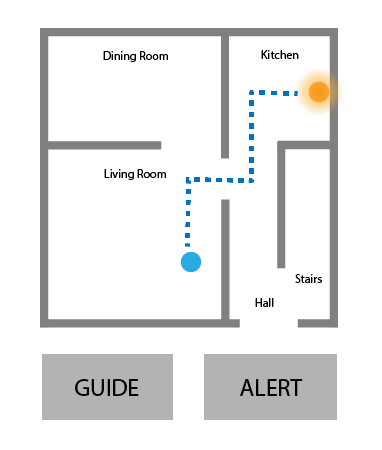

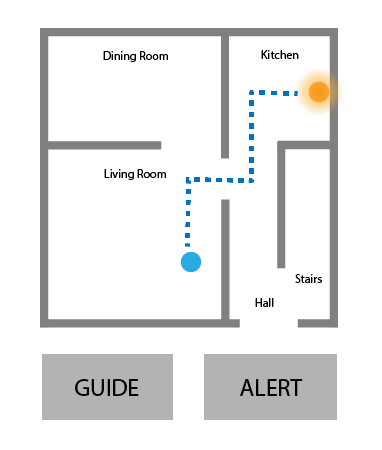

Concept

To give context to space and environment:

- An accessible app usable by a blind user.

- Log physical tags with the app.

- Log spatial maps with the app, e.g. of the home, workplace, or school/college.

- Record when an item that was in proximity to our user, such as keys, is no longer with them (i.e. put down somewhere or left behind), and where.

- Combine indoor tracking technology and device sensors with GPS and cell network triangulation strategies to provide a seamless experience.

- Enable push notifications and alternative access methods for our users in spaces such as a presentation, workshop, theatre, etc.
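The “left behind” idea above can be sketched as a simple timeout: if the phone stops hearing a tag for a while, assume the item was put down where it was last heard. This is a minimal Python sketch under loudly stated assumptions: the phone reports each Bluetooth sighting together with the user’s current landmark, the 30-second timeout is an illustrative guess, and SeparationMonitor and its methods are hypothetical names, not any Tile or Apple API.

```python
from dataclasses import dataclass, field


@dataclass
class TagState:
    name: str
    last_seen: float      # seconds, from the phone's clock
    last_location: str    # hypothetical landmark label, e.g. "kitchen > kitchen table"
    left_behind: bool = False


@dataclass
class SeparationMonitor:
    """Flags tagged items that have dropped out of Bluetooth range.

    Assumption: each sighting of a tag arrives with the user's current landmark;
    a tag unheard for longer than `timeout` seconds is treated as put down or
    left behind at the last landmark where it was heard.
    """
    timeout: float = 30.0
    tags: dict[str, TagState] = field(default_factory=dict)

    def sighted(self, name: str, now: float, location: str) -> None:
        # A fresh sighting replaces the old state, clearing any "left behind" flag.
        self.tags[name] = TagState(name, now, location)

    def check(self, now: float) -> list[TagState]:
        """Return the tags that have newly gone quiet since the last check."""
        newly_left = []
        for tag in self.tags.values():
            if not tag.left_behind and now - tag.last_seen > self.timeout:
                tag.left_behind = True
                newly_left.append(tag)
        return newly_left


monitor = SeparationMonitor(timeout=30.0)
monitor.sighted("keys", now=0.0, location="kitchen > kitchen table")
monitor.sighted("wallet", now=0.0, location="kitchen > kitchen table")
monitor.sighted("wallet", now=40.0, location="hall")  # the wallet is still with the user
for tag in monitor.check(now=45.0):
    print(f"Your {tag.name} were last heard near: {tag.last_location}")
# Your keys were last heard near: kitchen > kitchen table
```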

Available technologies

There are more than a handful of technologies considered for indoor navigation and accessibility, many of them not GPS-based, including:

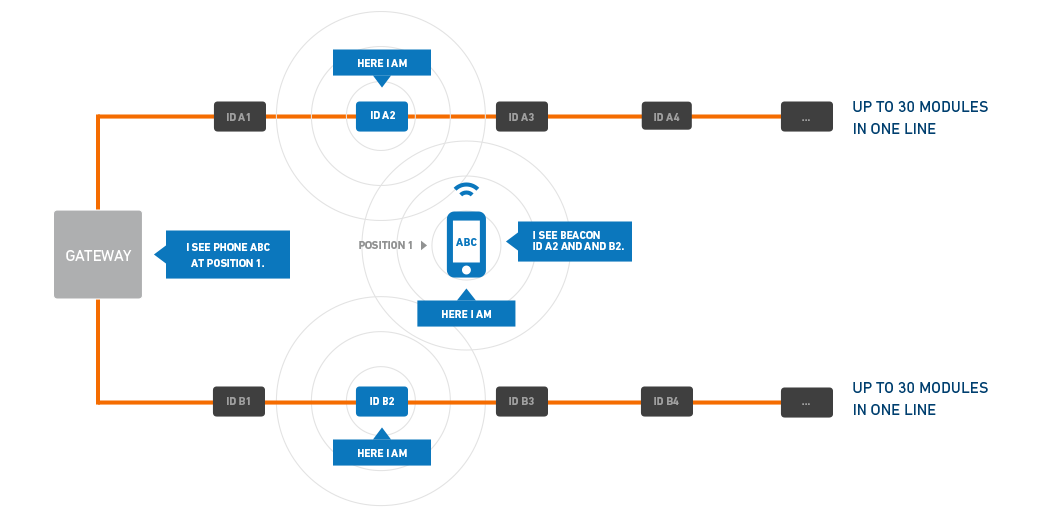

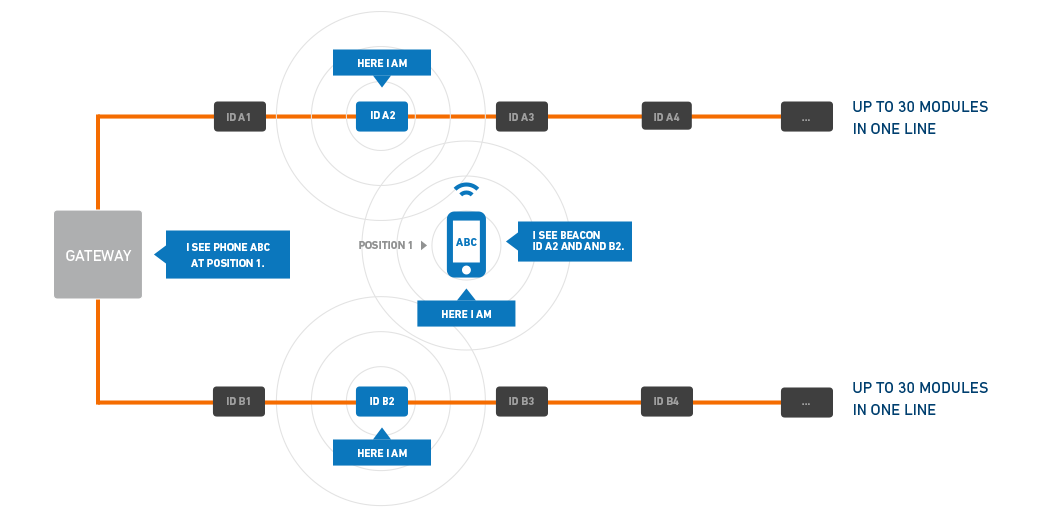

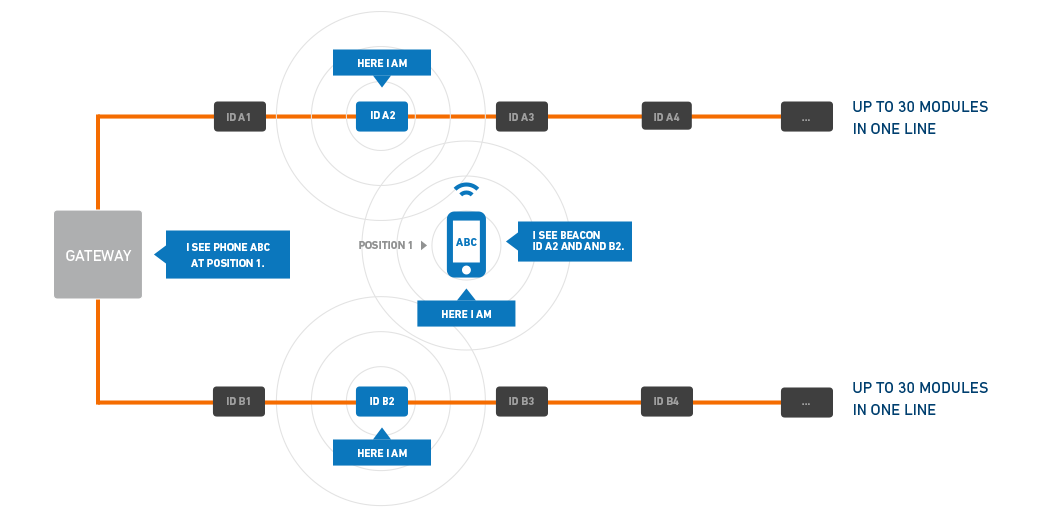

- iBeacon. Apple’s protocol for Bluetooth Low Energy emitters that broadcast an identifier the phone and app can detect and estimate their distance from (Apple, n.d.).

- Bluetooth emitters as waypoints. Similar to iBeacon, without the branding.

- Camera view. Using a series of photographs stored in a database with which to compare the current camera view.

- QR codes. Strategically placed QR codes could inform a device of its approximate, or even exact, location using the camera sensor.

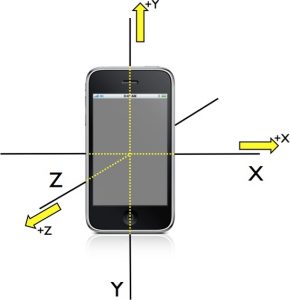

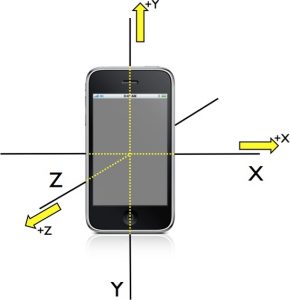

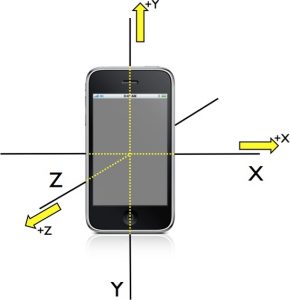

- Accelerometers and/or gyroscopes. These sensors detect motion and direction of travel. Without the support of additional positioning strategies, the margin for error can become large over a short distance.

- Cell tower triangulation. In areas of high signal strength, cell signals may penetrate buildings well enough to enable triangulation from serving towers.

- Wi-Fi access point triangulation. Using specifically engineered Wi-Fi access points, a receiver can estimate its distance and angle from the access point, and possibly also its elevation.

- GPS. Consumer GPS in a smartphone is typically accurate to a few metres outdoors, which may not prove adequate within small areas such as domestic rooms. GPS signals also suffer distortion, reflection, refraction, and absorption when passing through building materials.

- Magnetic field detection. Our domestic appliances and construction materials distort Earth’s natural magnetic field, creating local patterns that sensors can detect and recognise.

- Voice recognition. Through Siri (or alternatives, or in-app).

- Screen readers. Software and hardware (OCR) screen readers are available to “read” the screen.

- Translation services.
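Several of the options above (iBeacon, Bluetooth waypoints, Wi-Fi) come down to estimating distance from received signal strength (RSSI). A common textbook approach is the log-distance path-loss model, sketched below in Python. The -59 dBm calibration value and path-loss exponent of 2 are illustrative defaults only; this is not Apple’s own ranging algorithm, and real indoor readings are noisy, hence the median smoothing.

```python
from statistics import median


def estimate_distance_m(rssi_dbm: float, measured_power_dbm: float = -59.0,
                        path_loss_exponent: float = 2.0) -> float:
    """Log-distance path-loss estimate of the range to a Bluetooth emitter.

    measured_power_dbm: expected RSSI at 1 m (iBeacon adverts carry such a
    calibration value; -59 dBm is only an illustrative default).
    path_loss_exponent: about 2 in free space, typically higher indoors.
    """
    return 10 ** ((measured_power_dbm - rssi_dbm) / (10 * path_loss_exponent))


# Raw RSSI jumps around indoors; the median of recent samples damps outliers.
recent_rssi = [-70, -71, -90, -69, -72]
print(round(estimate_distance_m(-59), 1))                  # 1.0
print(round(estimate_distance_m(-79), 1))                  # 10.0
print(round(estimate_distance_m(median(recent_rssi)), 1))  # 4.0
```

Because the estimate grows exponentially with lost signal, a few dB of noise makes a large difference at range, which is one reason to favour nearby waypoints.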

Video: World Wide Web Consortium (2019). Voice Recognition.

What the Tile does

The Tile device emits Bluetooth signals, which are detected by participating smartphones running the Tile app.

A Tile user can press a button on the Tile device to make, say, a lost phone sound an audible alert. Using the app, the user can locate their Tiles on a map or, at closer quarters such as indoors, have the Tile emit an audible alert.

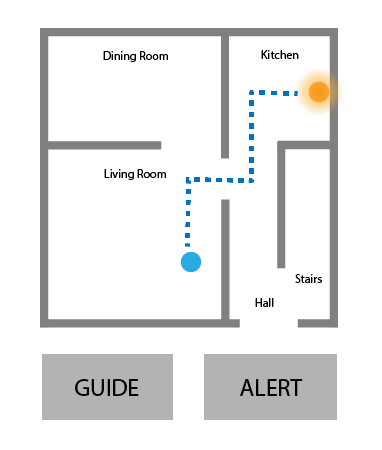

It does not enable navigation indoors or specify exactly where a mislaid item may be. For example, it does not say, “Your keys are in the kitchen on or by the table”.

Um. What’s the project device?

We need a working name for this concept. “MiFinder” was an initial thought, quickly dispelled by an Internet search, as were “MiTab” and a variety of other names already used by proprietary apps and products.

The quality of the name is not important. A code name is required.

i-cu. That’ll do. With the all-important hyphen.

What the i-cu does

As outlined above, the i-cu offers a range of utility. Key is the ability to keep track of people and tagged items around the home using a combination of data and sensory information.

Our users can map their space by moving around it and helping the i-cu learn its way around the landmarks they input. For example, “kitchen > kitchen table > my chair”.
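A landmark path like “kitchen > kitchen table > my chair” reads naturally as a tree. The Python sketch below shows one way such spoken or typed paths might be stored and searched; Landmark, add_path, and find are hypothetical names, and real positions (coordinates, beacon readings) would still need attaching to each node.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Landmark:
    name: str
    children: dict[str, Landmark] = field(default_factory=dict)

    def add_path(self, path: str) -> Landmark:
        """Add a path such as 'kitchen > kitchen table > my chair', creating any
        landmarks that do not exist yet, and return the deepest one."""
        node = self
        for part in (p.strip() for p in path.split(">")):
            node = node.children.setdefault(part, Landmark(part))
        return node

    def find(self, name: str, trail: tuple[str, ...] = ()) -> tuple[str, ...] | None:
        """Depth-first search returning the chain of landmarks leading to `name`."""
        for child in self.children.values():
            here = trail + (child.name,)
            if child.name == name:
                return here
            found = child.find(name, here)
            if found:
                return found
        return None


home = Landmark("home")
home.add_path("kitchen > kitchen table > my chair")
home.add_path("hall > coat hooks")
print(" > ".join(home.find("my chair")))  # kitchen > kitchen table > my chair
```

The returned chain is also the raw material for a spoken answer such as “Your keys are in the kitchen, on or by the table”.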

Strategically positioned, optional Bluetooth iBeacon-style devices around the space offer an opportunity for absolute positioning where other signal triangulation methods may fail, particularly when moving between floors.
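Absolute positioning from fixed beacons is usually done by trilateration: given each beacon’s known position and an estimated distance to it, solve for the point that fits. This Python sketch handles only the simplest case of three beacons on one floor with exact distances; the room layout is hypothetical, and noisy real-world ranges or extra beacons would call for a least-squares fit instead. Working out which floor the user is on would need more, for example knowing which floor the strongest beacon is on.

```python
import math

Point = tuple[float, float]


def trilaterate(beacons: list[Point], distances: list[float]) -> Point:
    """Estimate a 2D position from three beacons at known positions and the
    estimated distance to each.

    Subtracting the first circle equation (x - x1)^2 + (y - y1)^2 = d1^2 from
    the other two leaves a 2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = beacons
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if math.isclose(det, 0.0, abs_tol=1e-12):
        raise ValueError("beacons are collinear; position is ambiguous")
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - b1 * a21) / det)


beacons = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]  # hypothetical room layout, metres
true_position = (1.0, 1.0)
ranges = [math.dist(b, true_position) for b in beacons]
x, y = trilaterate(beacons, ranges)
print(round(x, 2), round(y, 2))  # 1.0 1.0
```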

App interactions

- Scan QR code of device tabs.

- Screen tapping and gestures.

- Voice communication via Siri, for example (Apple has extended Siri to third-party apps: see WikiHow and SiriKit (Apple, 2019)).

- Volume buttons.

- Device shaking and movement.

As we are interacting without vision, we can exploit existing Apple gestures (swipe, rotate, etc.), or even use the accelerometer so that tipping the device indicates an input or choice (caveat: there’s not much of this about except in gaming).
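Tipping the device as a choice could work roughly as below: read the sideways tilt from the gravity vector, ignore small movements, and only accept a tilt that is held. This Python sketch assumes an axis convention (x towards the right-hand edge of the screen, z out of the screen, gravity in units of g, so flat and face up is about (0, 0, -1)); check it against the platform’s motion API before relying on it. TiltSelector is a hypothetical name, and the 25° dead zone and hold count are guesses for user testing.

```python
import math
from typing import Optional


def tilt_angle_deg(gx: float, gz: float) -> float:
    """Sideways tilt of a phone held roughly flat, from the gravity vector.

    Under the assumed axes, positive angles mean the right-hand edge is lower.
    """
    return math.degrees(math.atan2(gx, -gz))


class TiltSelector:
    """Turns a deliberate, held tilt into a 'left' or 'right' choice.

    A dead zone and a hold count guard against accidental input from normal
    hand movement; both values are illustrative and need user testing.
    """

    def __init__(self, threshold_deg: float = 25.0, hold_samples: int = 10):
        self.threshold_deg = threshold_deg
        self.hold_samples = hold_samples
        self._candidate: Optional[str] = None
        self._count = 0

    def update(self, gx: float, gz: float) -> Optional[str]:
        angle = tilt_angle_deg(gx, gz)
        if angle > self.threshold_deg:
            candidate = "right"
        elif angle < -self.threshold_deg:
            candidate = "left"
        else:
            candidate = None
        if candidate != self._candidate:  # direction changed: restart the hold
            self._candidate, self._count = candidate, 0
        self._count += 1
        # Fire exactly once, when the tilt has been held long enough.
        if candidate and self._count == self.hold_samples:
            return candidate
        return None


selector = TiltSelector(hold_samples=3)
right_tilt = (math.sin(math.radians(40)), -math.cos(math.radians(40)))
results = [selector.update(*right_tilt) for _ in range(4)]
print(results)  # [None, None, 'right', None]
```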

Ambition

Being an inclusive app, i-cu could exploit the trend toward indoor navigation and marketing and offer downloadable floor plans of stores, museums, etc. for our users to follow paths and find areas and facilities. Integrating this into one app may benefit many more users of all abilities.

Giving navigable instructions

Apple iPhone and Watch haptic feedback is exploited by Google Maps to indicate left, right, or straight on. Critics report that by the time they have noticed a “tap” on their wrist or in their pocket, they have missed the opportunity to decode the instruction.

Offering verbal instructions may be an improvement, but relies on a listening device and on the capacity to listen to and decode the instruction in good time. Tutor Stefan suggested a smartphone with independent haptic feedback strips on the left and right. The drawback is needing a new smartphone.

An alternative may be to use tonal signals: a single steady tone for straight on, and beeps that decrease (in cadence and tone) as we deviate further left or right. As we are not decoding speech into instructions into actions, only keeping to the main tone, this may be the least taxing strategy? This system will be familiar to users of avalanche transceivers (Beacon Reviews, n.d.).
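The tonal scheme can be expressed as a mapping from heading error to a cue. In this Python sketch, the steady tone within ±10°, the 880 Hz to 440 Hz pitch range, and the 4-to-1 beeps per second are all illustrative numbers; stereo panning to say which side to turn towards is my own assumption (it presumes stereo headphones), since the scheme above does not specify how left and right are told apart. Cue and guidance_cue are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    pitch_hz: float          # tone frequency
    beeps_per_second: float  # 0 means one continuous tone
    pan: float               # -1.0 = left ear, 0.0 = centre, 1.0 = right ear


def guidance_cue(heading_error_deg: float, on_course_deg: float = 10.0) -> Cue:
    """Map heading error to a tonal cue.

    heading_error_deg: signed angle from the user's heading to the next
    waypoint; negative means the waypoint lies to the left (assumed convention).
    Within +/- on_course_deg the user hears one steady centred tone; beyond
    that, beeps slow down and drop in pitch the further the user deviates,
    panned toward the side they should turn to.
    """
    error = max(-180.0, min(180.0, heading_error_deg))
    if abs(error) <= on_course_deg:
        return Cue(pitch_hz=880.0, beeps_per_second=0.0, pan=0.0)
    # 0.0 just off course, 1.0 when facing directly away from the waypoint.
    severity = (abs(error) - on_course_deg) / (180.0 - on_course_deg)
    return Cue(
        pitch_hz=880.0 - 440.0 * severity,      # 880 Hz falling to 440 Hz
        beeps_per_second=4.0 - 3.0 * severity,  # 4 beeps/s falling to 1 beep/s
        pan=-1.0 if error < 0 else 1.0,
    )


print(guidance_cue(5))   # Cue(pitch_hz=880.0, beeps_per_second=0.0, pan=0.0)
print(guidance_cue(95))  # Cue(pitch_hz=660.0, beeps_per_second=2.5, pan=1.0)
```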

Sighted users will see an arrow, compass, or gauge/meter. Whatever works, really. It needs thinking and testing.

The key requirement is inputting accurate waypoints during the mapping phase and protecting our users from malicious modification of third-party floor plans.
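One way to protect users from tampered floor plans is to check each download against a digest that the venue publishes through a separate, trusted channel. The sketch below does this with SHA-256 from Python’s standard library; the floor-plan format and function names are hypothetical. In production, a public-key signature from the publisher would be the stronger option, because a pinned digest only helps if that separate channel is itself trustworthy.

```python
import copy
import hashlib
import hmac
import json


def digest_of(plan: dict) -> str:
    # Canonical JSON (sorted keys, no spaces) so the same plan always hashes the same.
    canonical = json.dumps(plan, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def verify_floor_plan(plan: dict, expected_sha256: str) -> bool:
    """True only if the plan matches the digest obtained from a trusted source."""
    # compare_digest avoids leaking, via timing, how many characters matched.
    return hmac.compare_digest(digest_of(plan), expected_sha256)


plan = {
    "venue": "Example Museum",  # hypothetical floor-plan format
    "waypoints": [
        {"id": "entrance", "x": 0.0, "y": 0.0, "floor": 0},
        {"id": "lift", "x": 12.5, "y": 3.0, "floor": 0},
    ],
}
published = digest_of(plan)  # in practice, fetched separately from the venue

tampered = copy.deepcopy(plan)
tampered["waypoints"][1]["x"] = 40.0  # a waypoint quietly moved
print(verify_floor_plan(plan, published), verify_floor_plan(tampered, published))  # True False
```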

The choice and design of tones could be VERY difficult. I anticipate:

- Move left

- Move right

- Turn left

- Turn right

- Go straight on

- STOP!

- Arrived
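Before choosing the tones themselves, the app has to decide which of these cues applies at any moment. This Python sketch is one hypothetical way to do that from the distance and heading error to the next waypoint. It assumes “move” means a small course correction and “turn” a change of direction, that a separate hazard detector sets hazard_ahead, and that every threshold is a placeholder for testing. Each Instruction would then map to its own tone pattern.

```python
from enum import Enum


class Instruction(Enum):
    MOVE_LEFT = "Move left"
    MOVE_RIGHT = "Move right"
    TURN_LEFT = "Turn left"
    TURN_RIGHT = "Turn right"
    STRAIGHT_ON = "Go straight on"
    STOP = "STOP!"
    ARRIVED = "Arrived"


def choose_instruction(distance_m: float, heading_error_deg: float,
                       is_final_waypoint: bool, hazard_ahead: bool = False,
                       arrive_radius_m: float = 0.75, on_course_deg: float = 10.0,
                       turn_deg: float = 45.0) -> Instruction:
    """Pick one of the seven cues, in order of urgency.

    Assumed convention: negative heading error means the waypoint is to the
    left. All thresholds are placeholders to be tuned through testing.
    """
    if hazard_ahead:
        return Instruction.STOP
    if is_final_waypoint and distance_m <= arrive_radius_m:
        return Instruction.ARRIVED
    if abs(heading_error_deg) <= on_course_deg:
        return Instruction.STRAIGHT_ON
    left = heading_error_deg < 0
    if abs(heading_error_deg) >= turn_deg:
        return Instruction.TURN_LEFT if left else Instruction.TURN_RIGHT
    return Instruction.MOVE_LEFT if left else Instruction.MOVE_RIGHT


print(choose_instruction(5.0, -20.0, False).value)  # Move left
print(choose_instruction(5.0, 90.0, False).value)   # Turn right
print(choose_instruction(0.5, 0.0, True).value)     # Arrived
```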

More about sounds from Khan Academy (2014).

Plan of action

- Research and document our users.

- Research available technologies.

- Research accessible visual design (WCAG 2.0, for example)

- Research available solutions (beyond Tile)

- Design or update an interface.

- Create wireframes / prototype.

- Test.

How do we test tones, etc.? Adobe XD has a limited voice capability… tones?

Summary

In draft… I’m thinking! Comment if you can help.

Reference this post

Godfrey, P. (Year, Month Day). Title. Retrieved , from,

References

AbilityNet. (n.d.). We are AbilityNet, Adapting Technology, Changing Lives. Retrieved March 14, 2019, from https://abilitynet.org.uk/

Aira. (n.d.). Your Life, Your Schedule, Right Now. Retrieved March 20, 2019, from https://aira.io/

Apple. (n.d.). iBeacon. Retrieved March 14, 2019, from https://developer.apple.com/ibeacon/

Apple. (2019). SiriKit. Retrieved March 20, 2019, from https://developer.apple.com/sirikit/

Beacon Line. (n.d.). Beacon Line, open your wireless ideas. Retrieved March 15, 2019, from http://www.beacon-line.com

Beacon Reviews. (n.d.). Indicators. Retrieved March 20, 2019, from https://beaconreviews.com/indicators.php

Central Statistics Office. (n.d.). Census of Population 2016 – Profile 1 Housing in Ireland. Retrieved March 15, 2019, from https://www.cso.ie/en/releasesandpublications/ep/p-cp1hii/cp1hii/od/

CNBC. (2017, September 20). Amazing electronic glasses help the legally blind see, but they are costly. Retrieved March 20, 2019, from https://www.cnbc.com/2017/09/20/these-amazing-electronic-glasses-help-the-legally-blind-see.html

Colour Blind Awareness. (n.d.). Colour Blindness. Retrieved March 14, 2019, from http://www.colourblindawareness.org/colour-blindness/

Dennis, A. (2002). Networking in the Internet Age. New York, NY, USA: John Wiley & Sons, Inc.

Fighting Blindness. (n.d.). Eye Conditions. Retrieved March 14, 2019, from https://www.fightingblindness.ie/eye-conditions/

Fogg, B. [BJ Fogg]. (2008, October 28). BJ Fogg on Simplicity. [Video file]. Retrieved from https://vimeo.com/2094487

Health Service Executive. (2018, October 25). Blind Welfare Allowance. Retrieved March 14, 2019, from https://www2.hse.ie/services/blind-welfare-allowance/blind-welfare-allowance.html

Health Service Executive. (n.d.). Colour vision deficiency. Retrieved March 14, 2019, from https://www.hse.ie/eng/health/az/c/colour-vision-deficiency/

Jernigan, K. (2005). A Definition of Blindness. The National Federation of the Blind Magazine for Parents and Teachers of Blind Children, 24(3). Retrieved from https://nfb.org/images/nfb/publications/fr/fr19/fr05si03.htm

Microsoft. (n.d.). An inclusive Office 365. Retrieved March 20, 2019, from https://www.microsoft.com/en-us/accessibility/office?activetab=pivot_1%3aprimaryr2

NCBI. (n.d.-a). Who we are and what we do. Retrieved March 15, 2019, from https://www.ncbi.ie/about-ncbi/who-we-are-and-what-we-do/

NCBI. (n.d.-b). Virtual Technology Club, SmartVision2 Mobile Phone [Podcast]. Retrieved March 20, 2019, from http://www.ncbi.ie/virtual-technology-club-smartvision2-mobile-phone/

RNIB. (n.d.). Registering as sight impaired. Retrieved March 14, 2019, from https://www.rnib.org.uk/eye-health/registering-your-sight-loss

Tile. (n.d.). How it works. Retrieved March 15, 2019, from https://www.thetileapp.com

World Wide Web Consortium. (2005, February). Introduction to Web Accessibility. Retrieved March 15, 2019, from https://www.w3.org/WAI/fundamentals/accessibility-intro/

World Wide Web Consortium. (2019). Voice Recognition. [Video File]. Retrieved from https://youtu.be/7RHG_XiQ0ck